Computer simulation is a fundamental tool to aid the development and study
of robotic systems. Existing simulation packages for multi-robot systems do not
provide the flexibility, scalability, and computational efficiency necessary to
deal with the challenges posed by the Swarmanoid project. Therefore, we have
designed and implemented ARGoS, a novel discrete-time simulator for general
multi-robot simulation that includes full support for the simulation of the
three different types of robots (eye-bots, hand-bots, and foot-bots) composing
the swarmanoid.

The simulator has been designed to provide the following core properties and

functionalities to the user:

- Modeling of all the components of the swarmanoid and of the environment

according to multiple, selectable, levels of physical detail.

- Computational efficiency, to allow running simulations of complex indoor

real-world scenarios including relatively large numbers of robots, and to

ease the use of computationally-demanding algorithms for learning and

control.

- Effective monitoring and visualization of the activities and performance

of the single robots and of the entire swarmanoid.

- Transparent migration of robot control code from the simulator to the

real robots.

- High modularity, to facilitate reuse and integration of available

software modules, permit independent code development from different

contributors, and ease both the static and dynamic addition and removal of

components and functionalities such as sensors, actuators, controllers,

physics engines, and visualization modalities.

General characteristics

ARGoS is a discrete-time simulator for multi-robot systems acting in 3D

spaces. The main code is entirely written in C++ and is all based on the use of

free software libraries.

Each robot and, more generally, each entity active in the 3D space is seen
as a composition of a physical structure, a set of sensors and actuators, and
a controller module. The simulator includes an extensive set of sensors
and actuators, in particular all those necessary for a realistic
simulation of the three types of robots composing the swarmanoid.

The simulator also includes multiple physics engines, that allow dealing

with the simulation of the physics of movements and collisions according to

different levels of realism and computational effort. During the same

simulation run the physics of different groups of robots can be handled by

different physics engines.

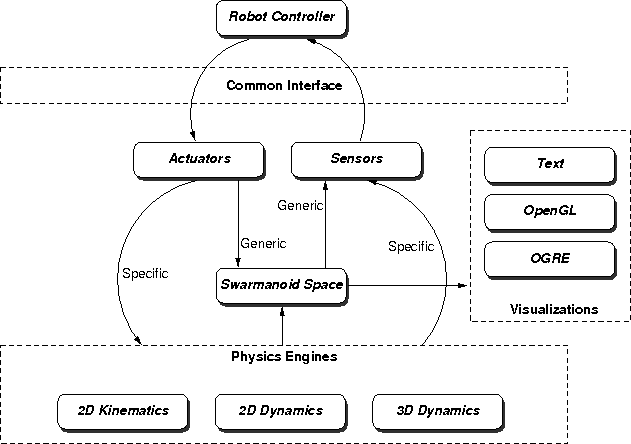

Figure 1 shows the general architectural characteristics of ARGoS concerning the

simulation of a single robot.

Figure 1

The Swarmanoid Space is the 3D arena where the robots and all the
other simulated entities (e.g., objects such as bookshelves and tables, or
active entities such as light emitters) live and act. It is implemented as a
scene graph.

Sensors and actuators can be "specific", in the sense that they
depend on the specific characteristics of the physics engine (see discussion
below), or "generic", in the sense that they are independent of the physics
engine and relate only to the general 3D coordinate system of the Swarmanoid
Space.

The Common Interface is an abstraction layer that allows the robot
controller to access the robot's on-board devices regardless of whether
the controller is running on a simulated or a real robot. This means that the
same controller code can be transparently compiled to run on either a simulated
or a real platform.
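A minimal sketch of how such an abstraction layer can work is given below. All class names (`ProximitySensor`, `WheelActuator`, `ObstacleAvoidanceController`, and the `Simulated...` back-ends) and the threshold and speed values are assumptions made for illustration; the actual Common Interface may be organized differently.

```cpp
// Hypothetical device interfaces, standing in for the Common Interface.
class ProximitySensor {
public:
    virtual ~ProximitySensor() = default;
    // Distance to the nearest obstacle, in metres.
    virtual double Read() const = 0;
};

class WheelActuator {
public:
    virtual ~WheelActuator() = default;
    // Target linear speeds of the left and right wheels, in metres per second.
    virtual void SetSpeeds(double left, double right) = 0;
};

// The controller is written only against the interfaces, so it does not
// know whether the devices behind them are simulated or real.
class ObstacleAvoidanceController {
public:
    ObstacleAvoidanceController(const ProximitySensor& sensor, WheelActuator& wheels)
        : sensor_(sensor), wheels_(wheels) {}

    void ControlStep() {
        if (sensor_.Read() < 0.1) {
            wheels_.SetSpeeds(-0.05, 0.05);  // obstacle close: turn on the spot
        } else {
            wheels_.SetSpeeds(0.1, 0.1);     // free path: go straight
        }
    }

private:
    const ProximitySensor& sensor_;
    WheelActuator& wheels_;
};

// Simulated back-ends; a real-robot build would provide hardware-backed
// classes implementing the same two interfaces.
class SimulatedProximitySensor : public ProximitySensor {
public:
    explicit SimulatedProximitySensor(double distance) : distance_(distance) {}
    void SetDistance(double distance) { distance_ = distance; }
    double Read() const override { return distance_; }
private:
    double distance_;
};

class SimulatedWheelActuator : public WheelActuator {
public:
    void SetSpeeds(double l, double r) override { left = l; right = r; }
    double left = 0.0;
    double right = 0.0;
};
```

The design choice illustrated here is that only the back-end classes change between the simulated and the real build, while `ObstacleAvoidanceController` is compiled unchanged for both.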

Input description of the scenario and visual output

The simulation scenario, which describes all the characteristics of the
environment, of the robots, and of the other entities, is given as input to the
simulator in the form of an XML file with a largely self-explanatory
syntax. A simple example scenario including two foot-bots and one physics
engine is given in this XML scenario description file.

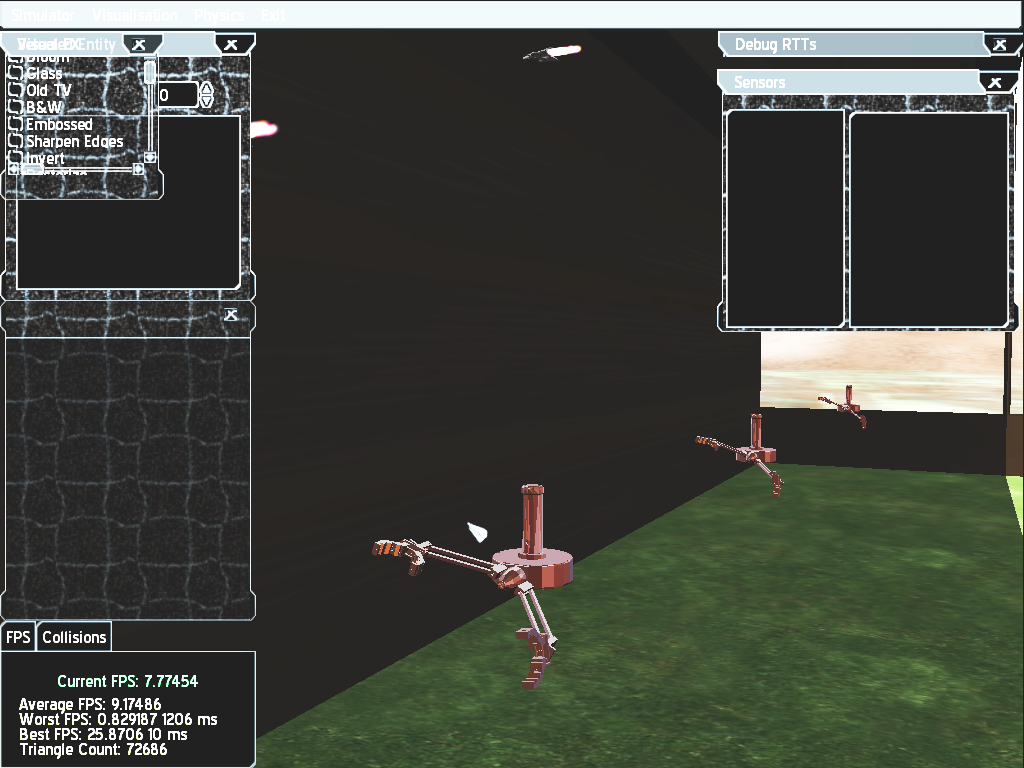

The simulator design also includes multiple levels of textual tracing
of the activities of the robots, as well as graphical visualization
based on popular open source 3D graphical renderers
such as OpenGL and Ogre. Figure 2 shows an OpenGL output frame
of a simulation example where foot-bots and eye-bots are tracking
"prey" entities (the text is added just to clarify the different roles). Figure
3 includes two Ogre output frames showing, respectively, foot-bots (left)
and hand-bots (right). Clicking on an image shows its enlarged
version.

Figure 2

Figure 3

Multiple physics engines and their concurrent use

The simulator includes the definition of multiple physics engines to
handle the calculations of the physical kinematics and dynamics of the robots
and the collisions happening among the physical entities in the simulation
arena.

The presence of multiple physics engines lets the user
model the different robots and their physical interactions according to the
desired level of physical precision and, accordingly, of allocated
computational resources. A simulation run can use one or more physics engines
concurrently, with each engine modeling, at the desired
level of detail, the Newtonian physics of the objects that fall within a specific
subspace of the 3D Swarmanoid Space. The subspace managed by each
physics engine can be 1D, 2D, or 3D (our 3D physics engines are
based on the ODE libraries, while the other engines are custom
implementations). In practice, each
engine independently lets the robots assigned to it act in a local
coordinate system specific to the engine subspace. A geometrical transformation
maps the local coordinates into the reference coordinates of the common Swarmanoid
Space. In this way, each robot, even if it is physically simulated as
living in, for instance, a 2D subspace, still has access to the full 3D
representation of the arena, so that it can consistently communicate with and
sense the other robots and entities.
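For a 2D subspace, the mapping from local to global coordinates can be sketched as below. The sketch assumes that the subspace is a plane described by an origin and two perpendicular unit vectors expressed in global coordinates; `Vec3`, `PlaneFrame`, and `LocalToGlobal` are hypothetical names, and the transformation actually used in ARGoS may be formulated differently.

```cpp
#include <array>
#include <cstddef>

using Vec3 = std::array<double, 3>;

// Hypothetical description of a 2D engine subspace embedded in the 3D space.
struct PlaneFrame {
    Vec3 origin;  // global position of the local origin
    Vec3 u;       // global direction of the local x axis (unit vector)
    Vec3 v;       // global direction of the local y axis (unit vector, perpendicular to u)
};

// Maps a point (lx, ly) of the local 2D system to global 3D coordinates:
// global = origin + lx * u + ly * v.
Vec3 LocalToGlobal(const PlaneFrame& frame, double lx, double ly) {
    Vec3 global{};
    for (std::size_t i = 0; i < 3; ++i) {
        global[i] = frame.origin[i] + lx * frame.u[i] + ly * frame.v[i];
    }
    return global;
}
```

For example, a horizontal plane at a height of 2 would use `origin = {0, 0, 2}`, `u = {1, 0, 0}`, and `v = {0, 1, 0}`, while a vertical wall at `x = 5` would use `origin = {5, 0, 0}`, `u = {0, 1, 0}`, and `v = {0, 0, 1}`.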

These characteristics are unique features of ARGoS. On the one hand, the
presence of multiple physics engines provides the user with a range of
selectable levels of physical precision. On the other hand, the fact that
multiple physics engines can run concurrently, each taking care of a different
part of the simulation, makes it possible to optimize the usage of computational
resources by allocating more resources (i.e., precision) only where they are
most needed.

For instance, if the focus of an experiment is on the eye-bots' behavior, and the
foot-bots are supposed to be rather passive, then the physics calculations for the
entire ground level and for all the foot-bots on it can be carried out on
a simplified 2D representation. All the physical interactions on the
ground can be managed by a simple physics engine based on a 2D geometry that
simulates only the kinematics, and not the dynamics, of the
foot-bots.
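A kinematics-only update of this kind can be sketched as follows, under the assumption that a ground robot is approximated as a differential-drive vehicle moving on a plane. `Pose2D` and `StepDifferentialDrive` are hypothetical names, and the model is a standard textbook one rather than the one implemented in ARGoS.

```cpp
#include <cmath>

// Planar pose of a robot: position in metres, heading in radians.
struct Pose2D {
    double x;
    double y;
    double theta;
};

// Advances the pose by one time step dt (explicit Euler integration).
// vLeft and vRight are the wheel speeds in metres per second; axle is the
// distance between the two wheels in metres. Masses, forces, and friction
// are ignored: this is kinematics only.
Pose2D StepDifferentialDrive(Pose2D pose, double vLeft, double vRight,
                             double axle, double dt) {
    const double v = 0.5 * (vLeft + vRight);       // forward speed
    const double omega = (vRight - vLeft) / axle;  // turning rate
    pose.x += v * std::cos(pose.theta) * dt;
    pose.y += v * std::sin(pose.theta) * dt;
    pose.theta += omega * dt;
    return pose;
}
```

Because no forces are integrated, each step costs only a few arithmetic operations per robot, which is the kind of saving the simplified 2D representation is meant to provide.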

Figure 4 shows a rather extreme situation in which the simulation in the 3D
Swarmanoid Space is managed by three different physics engines
working on different 2D subspaces. In this case, in which precision
is deliberately sacrificed for the sake of computational efficiency, the foot-bots
are approximated as 2D entities on the ground, the eye-bots are approximated as
2D entities moving on a horizontal plane located at a certain height above the
ground, and the hand-bots are approximated as 2D entities acting on the wall.

Figure 4

Sensors and actuators

The simulator provides a number of sensors and actuators that can be attached
to a controller to produce a realistic simulation of a foot-bot, eye-bot, or
hand-bot, or to create any new virtual entity to be used during the
simulation.

Each sensor or actuator is either "specific" to the characteristics of a
physics engine (e.g., a camera sensor to be used inside a 2D physics engine
implements a camera returning a one-dimensional array of pixels, while a
camera sensor for a 3D subspace returns a 2D pixel array), or "generic", if it
is always related to the global 3D structure of the Swarmanoid Space.

In both cases, the controllers access sensors and actuators through the Common
Interface abstraction layer, which hides from the controller whether
it is acting on a simulated or on a real robot. This important feature
makes it possible to port directly to the real robots the controller code
debugged and tested in simulation.

An incomplete list of the main sensors and actuators currently implemented
and/or already scheduled for implementation is the following:

- wheel actuator,

- IR proximity sensor,

- gripper actuator,

- joint encoder,

- force-torque sensor,

- light sensor,

- IR range and bearing sensor,

- microphone and sound detector,

- inclinometer,

- omni-directional camera,

- linear camera,

- wi-fi transmitter and receiver,

- laser beam actuator,

- rotor blades actuator,

- ...